Intelligent mobile robot

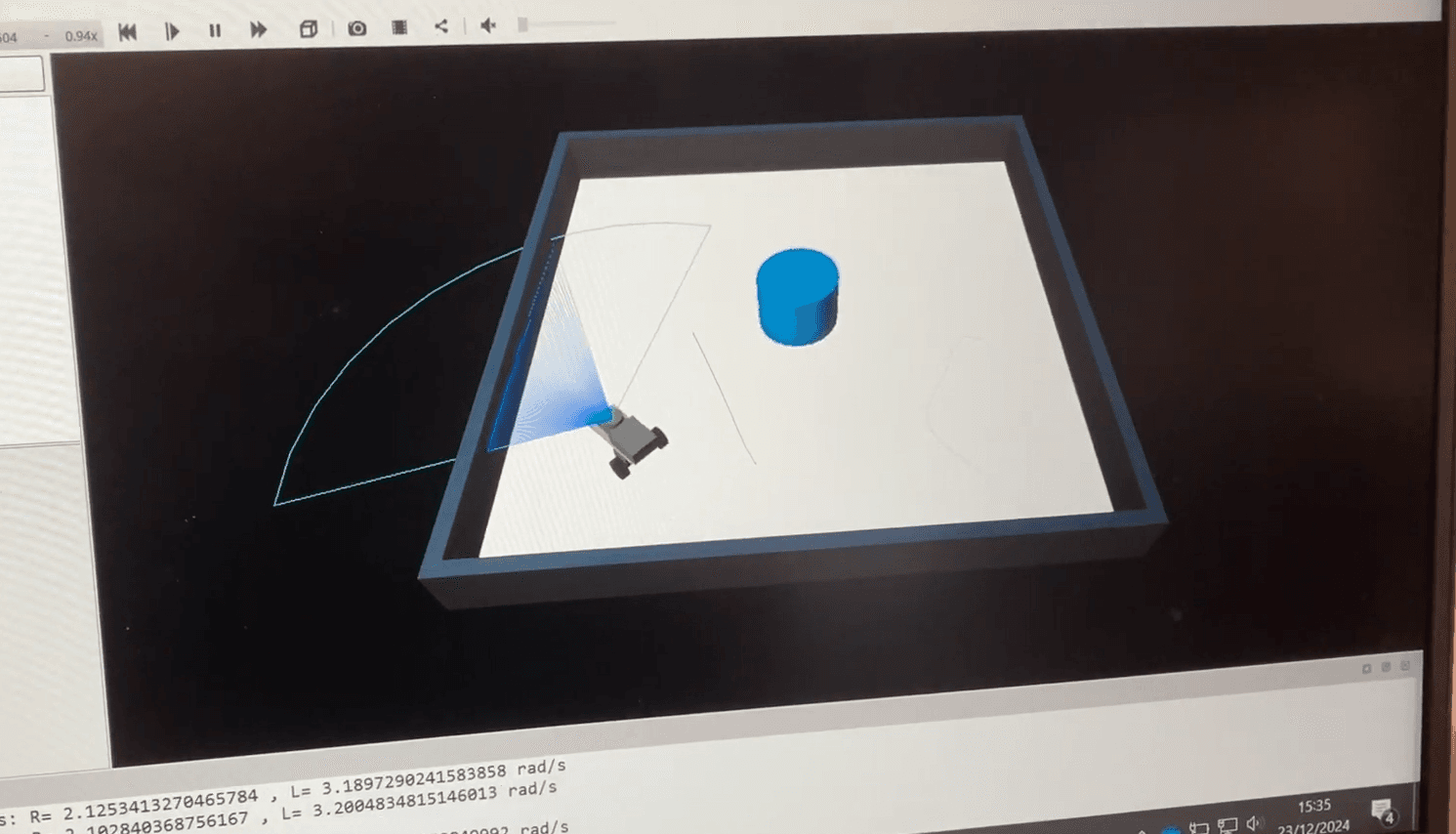

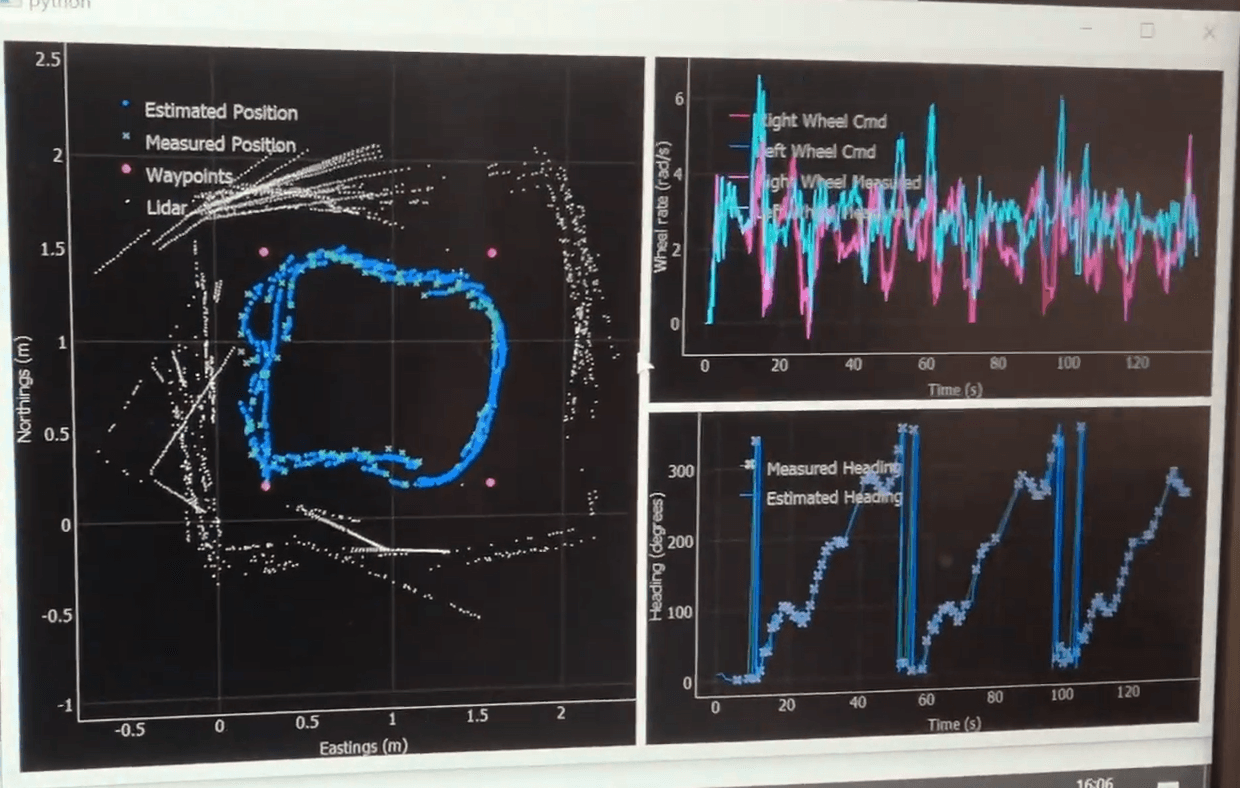

Project overview: As part of a collaborative team project, I contributed extensively to building a fully autonomous mobile robot control system capable of navigating an indoor arena relying solely on lidar sensor data. Our work focused on developing and refining the underlying control and localisation algorithms from first principles, with a primary goal of achieving accurate pose estimation and reliable path following without GPS or external positioning information.

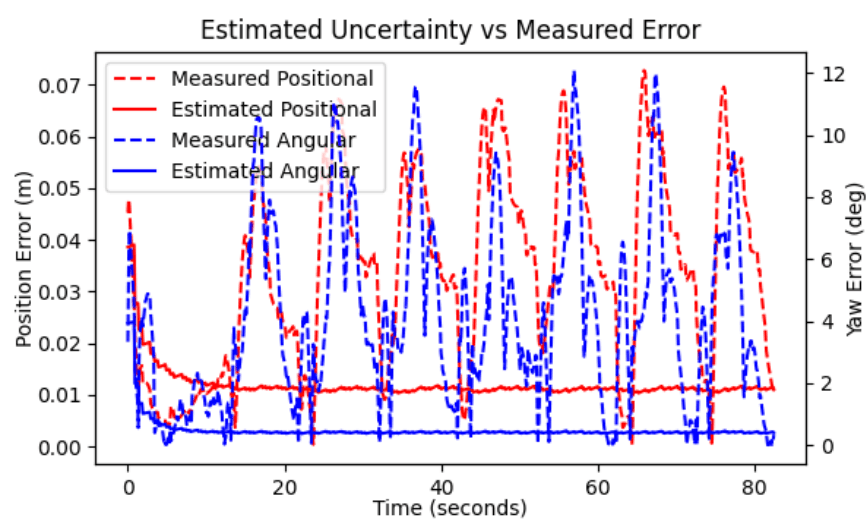

A central challenge was sensor fusion, which I addressed through the implementation of an Extended Kalman Filter (EKF) combining wheel odometry and visual landmark pose estimates derived from Aruco fiducial markers detected via lidar point clouds. I rigorously analysed sensor noise characteristics by experimentally capturing static and dynamic datasets, observing Gaussian distributed errors in Aruco positional and angular measurements as well as systematic variance in wheel encoder data. These insights informed initial noise covariance models critical for EKF parameterisation.

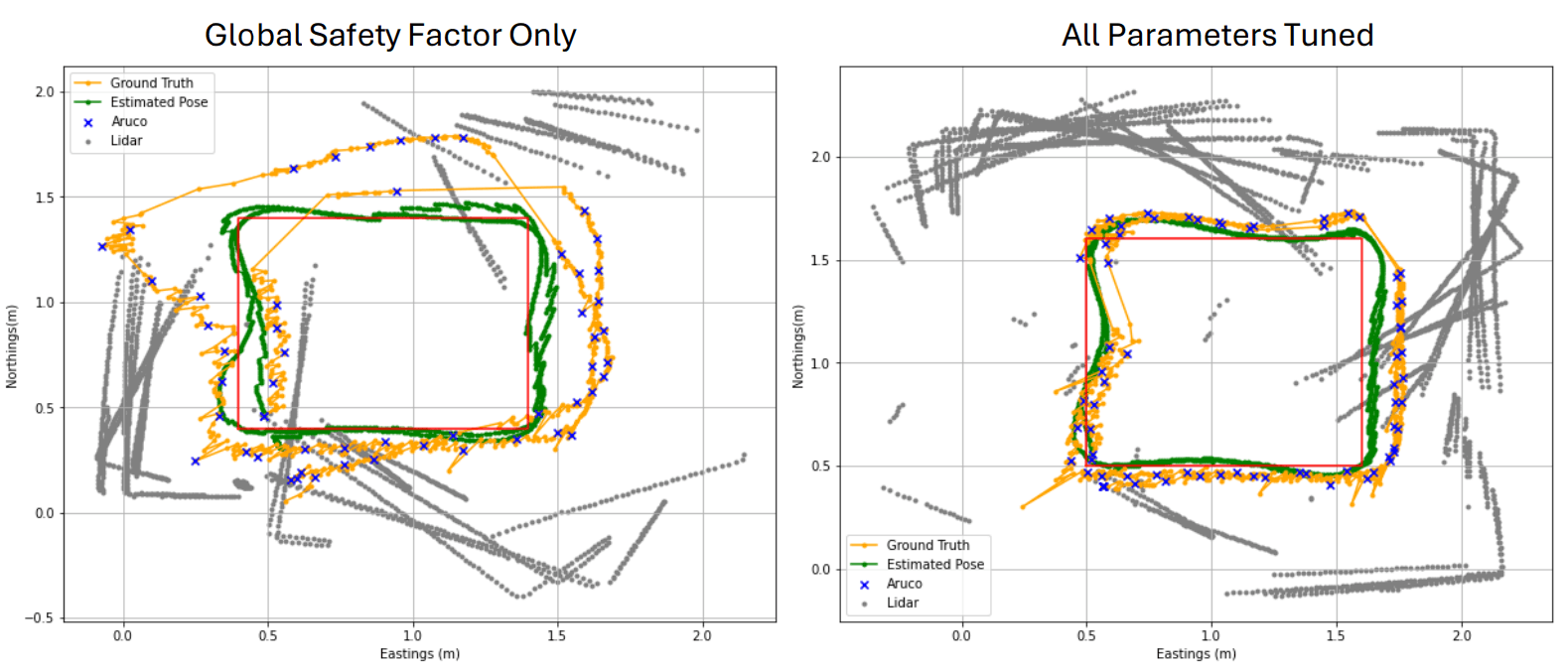

Parameter tuning was a vital iterative process. I employed simulation in Webots, combined with ground truth comparisons, to scale and optimise uncertainty estimates systematically. Tests revealed that a global scale factor of 3 multiplied by experimentally measured noise values provided the best trade-off between responsiveness to landmark updates and prediction stability. The EKF demonstrated robust, low drift localisation across consecutive laps of the arena, maintaining an average position error of 0.08 m and heading error of 8.2 degrees.

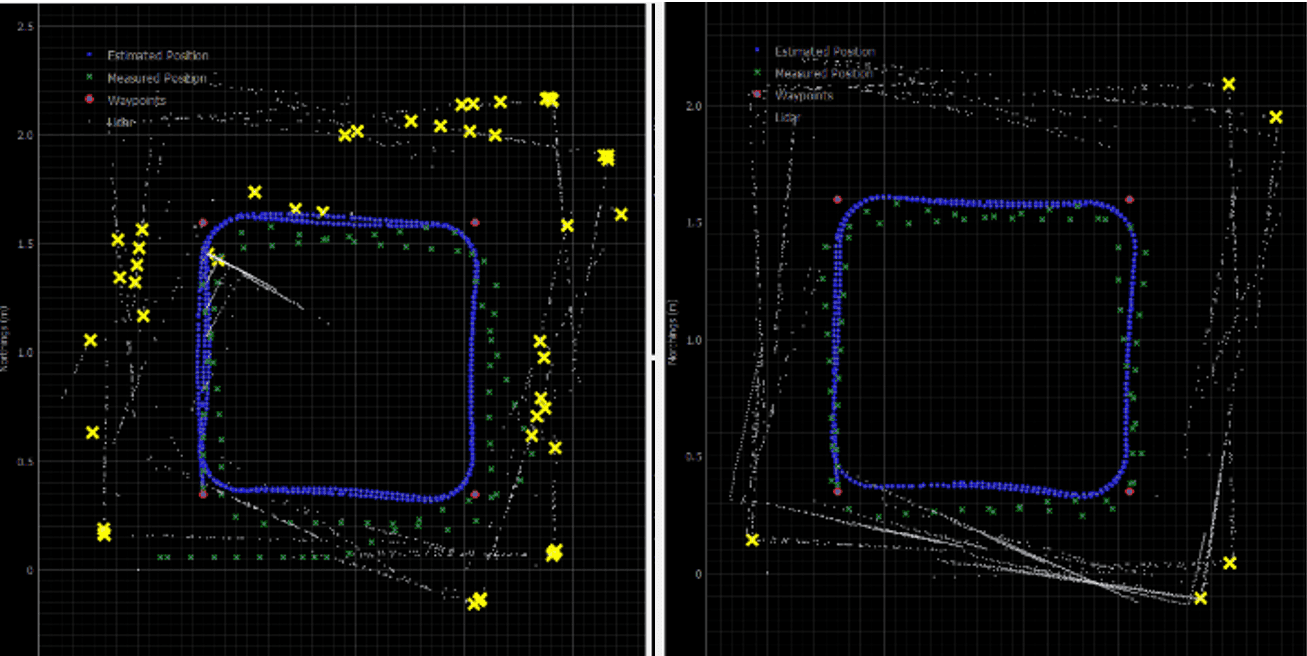

To address EKF limitations in more complex and ambiguous environments, I also implemented and tested a Particle Filter (PF) localisation module capable of maintaining multiple pose hypotheses. The PF employed a predictive motion model injecting stochastic process noise and an observation step weighting particles based on sensor likelihoods. To improve real-world applicability, I introduced artificial Gaussian noise to simulated Aruco measurements, enhancing robustness against sensor imperfections. Test runs under tuned parameters confirmed the PF’s greater resilience to non-Gaussian uncertainty and capability to recover from tracking failures, albeit at higher computational cost.

Adding to localisation, I developed a landmark detection module focused on identifying corner features from lidar scans. Starting with curvature-based analysis, I refined detection via a consistency filter verifying repeated observations to minimize false positives. Due to real sensor data degradation, I adapted the method to a RANSAC line-fitting approach for improved robustness against occlusion and noise, despite reduced reliability in extreme scan conditions.

Furthermore, I contributed to the implementation of Particle SLAM integrating Gaussian Process Regression (GPR) to generate featureless, probabilistic maps of the arena. This approach allowed for mapping and localisation without explicit prior environment knowledge, demonstrating adaptability to novel or unstructured indoor spaces. To enhance efficiency, LIDAR point clouds were downsampled and filtered before incremental map updates at runtime.

Real-world deployment was preceded by extensive simulation validation with Webots, ensuring that parameter tuning, algorithmic changes, and sensor fusion strategies translated effectively onto the physical robot. Throughout physical testing, speeds were moderated during turning manoeuvres to guarantee sufficient lidar data density and improve map fidelity, acknowledging the system’s sensitivity to sensor noise and environmental complexity.

Overall this is the kind of project I like: hands-on hardware, fast iteration, and a clear path to deeper Physical AI work without needing a seven-figure lab.