SO-Arm + LeRobot (VR training)

Project overview: I built and trained a low-cost SO-100 robotic arm using LeRobot, with the goal of creating a reliable ‘launchpad’ for longer-horizon Physical AI experiments. The emphasis here wasn’t chasing a perfect benchmark, but proving an end-to-end pipeline I can iterate on: hardware, data capture, teleoperation UX, training, and evaluation.

- Printed and assembled a SO-100 arm.

- Set up LeRobot on a £60 Linux PC (eBay special) to run the local control + data pipeline.

- Implemented VR headset control so I can teleoperate and collect data without a second physical arm (button mapping, controller modes, and safety-friendly UX).

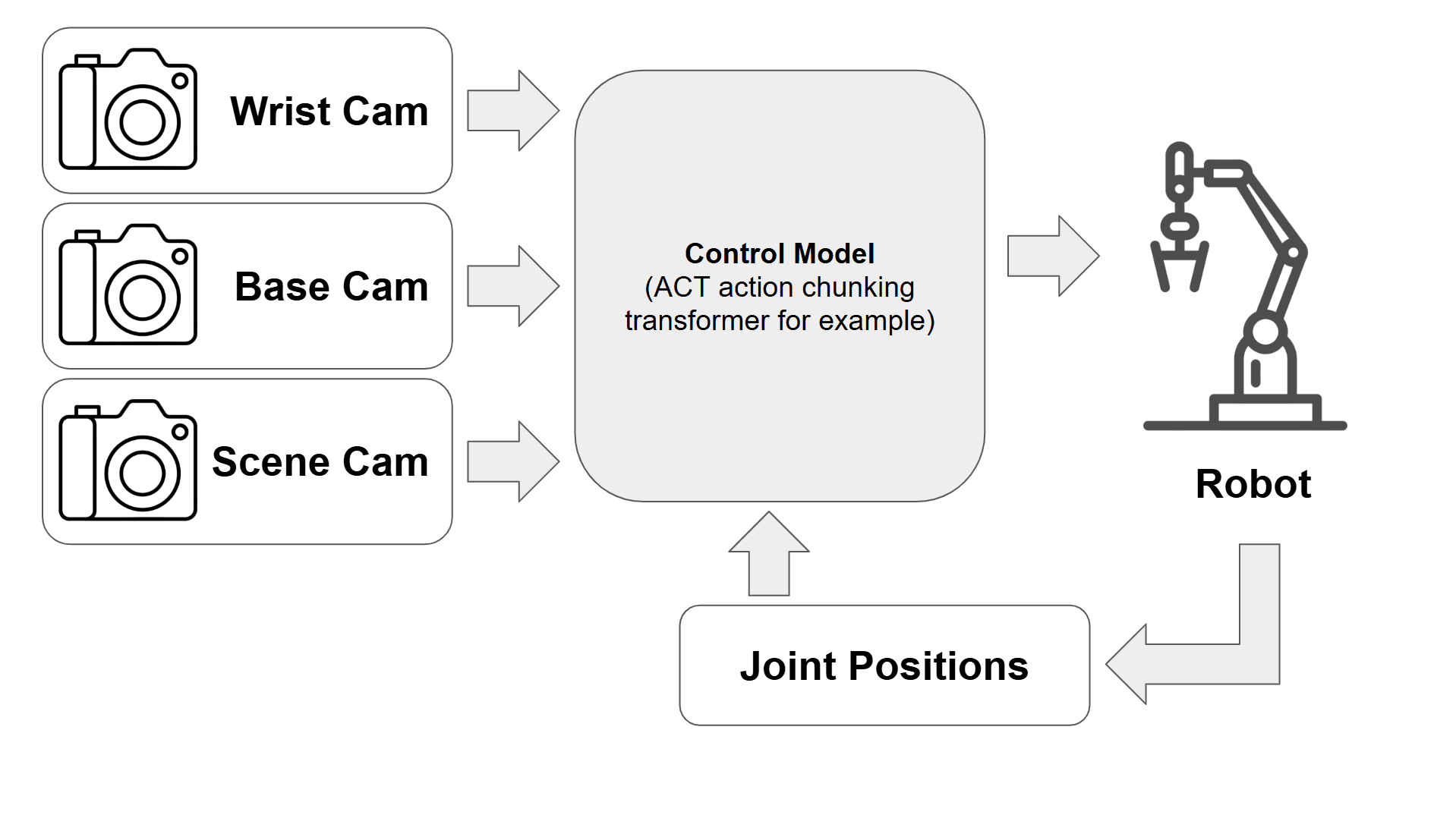

- Collected training data that included joint angles and video feeds from 3 cameras.

- Trained an ACT policy on a Runpod RTX 4090 instance for under $1.

- Trained the robot to pick up a small green roll-on deodorant and place it on top of a red power bank: ~50% success on the task from ~50 episodes of training data.

This was the very first test run, fully autonomous and trained from roughly 15 minutes of training data!
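A success rate measured over a handful of evaluation trials is quite uncertain. The number of trials behind the ~50% figure isn't stated here, so the sketch below uses hypothetical counts (5/10, 10/20, 25/50) to show how a 95% Wilson score interval for a success rate narrows as trials are added. The function name is illustrative and only the Python standard library is used.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a binomial success rate (z = 1.96)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    z2 = z * z
    denom = 1.0 + z2 / trials
    centre = (p + z2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return centre - half_width, centre + half_width


# Hypothetical evaluation counts, all at a 50% observed success rate.
for successes, trials in [(5, 10), (10, 20), (25, 50)]:
    lo, hi = wilson_interval(successes, trials)
    print(f"{successes}/{trials}: 95% CI {lo:.2f} to {hi:.2f}")
```

With 5 successes in 10 trials the interval runs from about 0.24 to 0.76, so comparing two policies with clear metrics needs enough trials for their intervals to separate.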

Setup: The hardware is intentionally simple: a compact arm workspace, 3 fixed cameras, and a cheap always-on Linux box, all chosen to keep the development loop tight. This kept integration friction low and made it easy to re-run data collection and training experiments quickly.

VR control bridge: To let the VR headset (Oculus Quest 1) provide control input to the arm, I created a small localhost web app that acts as a WebSocket bridge: the headset/controller streams actions to the bridge, and the LeRobot training script subscribes to the same stream so the actions are logged synchronously. It’s lightweight, easy to debug, and lets me control the arm without having to build a second physical teleoperator.
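The bridge is essentially one publisher (the headset) fanning out to several subscribers (the arm driver and the logger). The sketch below shows that core with only the standard library (`asyncio`) and leaves out the WebSocket transport itself: in a real bridge, the server's per-connection handler would call `publish` with each text message received from the headset. The message schema (`ControllerAction` with `seq`, `t`, `ee_delta`, `gripper`, `enabled`) and the class names are hypothetical, not the project's actual format or a LeRobot API.

```python
from __future__ import annotations

import asyncio
import json
import time
from dataclasses import asdict, dataclass


@dataclass
class ControllerAction:
    """Hypothetical JSON message sent by the headset for each controller update."""
    seq: int               # increasing counter so subscribers can detect gaps
    t: float               # sender timestamp (seconds)
    ee_delta: list[float]  # hypothetical end-effector delta [dx, dy, dz] in metres
    gripper: float         # assumed convention: 0.0 = open, 1.0 = closed
    enabled: bool          # "deadman" button held; the arm should ignore actions when False


class ActionBus:
    """Fan one incoming action stream out to several independent subscribers."""

    def __init__(self) -> None:
        self._subscribers: list[asyncio.Queue[str]] = []

    def subscribe(self, maxsize: int = 0) -> asyncio.Queue[str]:
        # maxsize=0 is unbounded (keep every message); maxsize=1 keeps only the newest.
        queue: asyncio.Queue[str] = asyncio.Queue(maxsize=maxsize)
        self._subscribers.append(queue)
        return queue

    def publish(self, raw: str) -> None:
        for queue in self._subscribers:
            if queue.full():
                queue.get_nowait()  # drop the stale action instead of blocking the sender
            queue.put_nowait(raw)


async def demo() -> tuple[list[ControllerAction], ControllerAction]:
    bus = ActionBus()
    arm_queue = bus.subscribe(maxsize=1)  # arm driver: only the newest command matters
    log_queue = bus.subscribe()           # dataset logger: must not drop anything

    for seq in range(3):
        action = ControllerAction(seq=seq, t=time.time(), ee_delta=[0.01, 0.0, 0.0],
                                  gripper=0.0, enabled=True)
        bus.publish(json.dumps(asdict(action)))

    logged = [ControllerAction(**json.loads(await log_queue.get()))
              for _ in range(log_queue.qsize())]
    latest = ControllerAction(**json.loads(await arm_queue.get()))
    return logged, latest


if __name__ == "__main__":
    logged, latest = asyncio.run(demo())
    print(len(logged), latest.seq)
```

A size-1 queue means a slow control loop always acts on the freshest command, while the unbounded logger queue keeps every action for the dataset; the `enabled` flag is one way to express safety-friendly deadman behaviour.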

TODO: The current results are a solid proof-of-pipeline, but the interesting part is raising the ceiling: success rate, generalisation, and task complexity. Most improvements come down to better demonstrations and tighter experimental control (in a fun way, not a lab-coat way). I’d also like to try different model architectures. The proof of concept uses an ACT (Action Chunking with Transformers) policy, which is easy and fast to train, but there are many alternatives that may work better for this setup, e.g. VLA (vision-language-action) models, which have been shown to generalise better.

- Cleaner training environment (less visual clutter) or deliberately structured clutter (but consistent).

- More consistent lighting (or purposefully varied lighting, but with enough coverage in the dataset).

- More demonstrations and higher-quality demonstrations: smoother trajectories, fewer hesitations, and better coverage of edge cases.

- Better camera consistency: fixed exposure/white balance and repeatable camera poses.

- Try alternative policy families and training recipes that may generalise better than the ACT run I used (and compare with clear metrics).
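As a reference point for comparing against other policy families: ACT predicts a chunk of future actions at each step, and the ACT paper describes an optional temporal ensembling step that averages every overlapping chunk's prediction for the current timestep with exponential weights w_i = exp(-m·i), where i = 0 is the oldest prediction. Below is a pure-Python sketch of that averaging under those assumptions. It is not LeRobot's implementation, the function name is hypothetical, and whether this run used ensembling or executed chunks open-loop isn't stated here.

```python
from __future__ import annotations

import math


def temporal_ensemble(chunks: dict[int, list[list[float]]], t: int, m: float = 0.01) -> list[float]:
    """Average every chunk's prediction for timestep t.

    chunks[s] is the action chunk predicted at step s; chunks[s][j] is its action for step s + j.
    Weights follow w_i = exp(-m * i) with i = 0 for the oldest prediction. m is a tunable
    hyperparameter; 0.01 here is only an illustrative value.
    """
    predictions = []
    for start in sorted(chunks):  # oldest chunk first
        offset = t - start
        if 0 <= offset < len(chunks[start]):
            predictions.append(chunks[start][offset])
    if not predictions:
        raise ValueError(f"no chunk covers timestep {t}")

    weights = [math.exp(-m * i) for i in range(len(predictions))]
    total = sum(weights)
    dims = len(predictions[0])
    return [sum(w * p[d] for w, p in zip(weights, predictions)) / total for d in range(dims)]


# Toy example: 1-D actions, chunk size 3, a new chunk predicted at every step.
chunks = {0: [[0.0], [1.0], [2.0]], 1: [[3.0], [4.0], [5.0]], 2: [[6.0], [7.0], [8.0]]}
print(temporal_ensemble(chunks, t=2))  # blends 2.0, 4.0 and 6.0
```

With m > 0 the oldest prediction gets the largest weight, which smooths motion at the cost of reacting a little more slowly to new observations; m = 0 reduces to a plain average (4.0 in the toy example).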

Scaling to richer tasks: Once the pipeline is stable, I can move beyond single pick-and-place and into longer-horizon tasks where planning and recovery matter. A nice next step is building tasks with a simple ‘spec’ (colors, bins, counts) so I can measure generalisation rather than memorisation.

- Sorting: place red bricks in one box and blue bricks in another (variable starting positions).

- Multi-stage manipulation: pick → place → re-grasp → align (introduce intermediate success criteria).

- Robustness: start from slightly perturbed arm poses and object positions; evaluate recovery behavior.

- Data efficiency experiments: how few demonstrations can still reach a target success rate with good augmentation/regularisation?
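A task "spec" of the kind described above can be as small as a frozen dataclass plus a seeded sampler. The sketch below is hypothetical throughout: `SortingSpec`, `sample_episode`, `sort_score`, the workspace bounds in metres, the six-joint start-pose perturbation and the brick counts are illustrative assumptions, not part of LeRobot or the current setup. Seeding the random generator makes each layout reproducible, so collecting demonstrations on one set of seeds and evaluating on seeds never used for data collection gives a simple held-out split.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SortingSpec:
    """Hypothetical spec: which color goes in which bin, and how many bricks of each color."""
    color_to_bin: tuple[tuple[str, str], ...]
    count_per_color: int


@dataclass
class EpisodeSetup:
    bricks: list[tuple[str, float, float]]  # (color, x, y) in metres, assumed workspace frame
    joint_offsets: list[float]              # radians added to the nominal start pose


def sample_episode(spec: SortingSpec, rng: random.Random,
                   x_range: tuple[float, float] = (0.10, 0.30),
                   y_range: tuple[float, float] = (-0.15, 0.15),
                   n_joints: int = 6, max_joint_offset: float = 0.05) -> EpisodeSetup:
    """Randomise brick positions and perturb the start pose (for robustness experiments)."""
    bricks = [(color, rng.uniform(*x_range), rng.uniform(*y_range))
              for color, _ in spec.color_to_bin
              for _ in range(spec.count_per_color)]
    rng.shuffle(bricks)
    offsets = [rng.uniform(-max_joint_offset, max_joint_offset) for _ in range(n_joints)]
    return EpisodeSetup(bricks, offsets)


def sort_score(spec: SortingSpec, placements: list[tuple[str, str]]) -> float:
    """Fraction of bricks in the correct bin; placements are (color, bin) pairs."""
    target = dict(spec.color_to_bin)
    correct = sum(1 for color, bin_name in placements if target.get(color) == bin_name)
    return correct / (len(target) * spec.count_per_color)


spec = SortingSpec((("red", "box_a"), ("blue", "box_b")), count_per_color=2)
train_layouts = [sample_episode(spec, random.Random(seed)) for seed in range(50)]
eval_layouts = [sample_episode(spec, random.Random(seed)) for seed in range(1000, 1020)]

print(len(train_layouts), len(eval_layouts))
print(sort_score(spec, [("red", "box_a"), ("red", "box_a"), ("blue", "box_b"), ("blue", "box_a")]))
```

The final line scores a hypothetical outcome where one blue brick lands in the wrong box, giving 0.75; a partial score like this is one way to add intermediate success criteria instead of a single pass/fail.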

Overall this is the kind of project I like: hands-on hardware, fast iteration, and a clear path to deeper Physical AI work without needing a seven-figure lab.